Behind the scenes of Wikipedia's image suggestions feature

2022-08-31 11:33:41 +0200

My team at Wikimedia Foundation (the Growth team) recently completed an iteration of work on Wikipedia’s image suggestion feature.

We built the feature on top of a data pipeline, MediaWiki (the PHP framework behind Wikipedia), VisualEditor (MediaWiki’s WYSIWYG editor), and ElasticSearch. In this post, I’m going to describe how the pieces fit together.

But first: what does the feature do, and why did we build it?

Helping newcomers get started with editing Wikipedia

The Growth team writes software to help retain newcomer editors and make it easier for new users to participate in Wikipedia communities.

One way we do that is with structured tasks: we break down complex editing processes and present them in step-by-step workflows that guide newcomers and are easy to do on mobile devices.

The “image suggestion” structured task presents users with articles that don’t have images. Our software offers a suggested image, asks the user to decide if it’s a good fit, and if so, the user can click a button to add it to the article.

Ideally the feature accomplishes two things:

- new users feel a sense of accomplishment in adding images to previously unillustrated articles

- the encyclopedia is improved for readers by having relevant images connected to articles

How it works

The starting point for this feature is /wiki/Special:Homepage, a prominent location for newly registered users on Wikipedia. (We redirect users to this page after registration and also provide various other guides to help users find this page.)

This page contains a number of interactive components, one of which is the suggested edits module. That module is responsible for suggesting tasks to the user based on their topic interests, as well as the type of editing (copyediting, adding references, etc.) they are interested in doing.

In this project, we added a new suggested edit type, “image suggestions”, and opted in an experiment group on a few wikis.

Here’s a screenshot where a user sees that the unillustrated “Rembetiko” article on Czech Wikipedia has a candidate image suggestion:

But how do we know to present this specific article to the user?

Image suggestion pipeline

The Data Engineering, Data Platform, Search Platform and Structured Data teams built a data pipeline[1] that is responsible for:

- generating a dataset of articles with no images

- recommending images for unillustrated articles based on images in other Wikimedia projects that are connected to those articles via Wikidata

- updating the ElasticSearch index using a weighted tag for recommendation.image/exists|1

- exporting the dataset to Cassandra

A diagram of the pipeline:

The end result is that the search index can tell us which articles have image suggestions, and we can get the resulting data from Cassandra.

The pipeline configuration also allows for additional workflows, for example, sending notifications to experienced users about adding suggested images to articles they have on their watchlist or have recently edited[2].

Help from the community

The pipeline wouldn’t be so useful if the results were unusable across the many languages that Wikipedia supports. For that, we relied heavily on Wikimedia Foundation’s Product Ambassadors, who helped review algorithm output in Arabic, Bengali, Czech, French, and Spanish, leading to improvements to the algorithm before we deployed to production.

Integrating search

CirrusSearch, the search extension we use for integrating with ElasticSearch, allows extensions to register search keywords. Our team’s extension, GrowthExperiments, registers a keyword for hasrecommendation:image, which filters for results using the weighted tag I mentioned above.

We also don’t want to suggest articles that have infoboxes, because an infobox makes it harder to add an image correctly. So we also use our extension’s -hastemplatecollection:<collection> search feature to filter out articles that use infobox templates. These templates are defined on-wiki by the community, using the Special:EditGrowthConfig feature.
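To make the filtering concrete, here is a minimal sketch of how the two keywords could be combined into one search string. It is written in Python purely for illustration (GrowthExperiments itself is a PHP extension), and the function name and the collection name `infobox` are assumptions, not names taken from the extension.

```python
from typing import Iterable


def build_image_suggestion_query(excluded_template_collections: Iterable[str]) -> str:
    """Compose a search string that keeps only articles with an image
    recommendation and drops articles that use an excluded template collection."""
    terms = ["hasrecommendation:image"]
    # A leading "-" negates the keyword, so matching articles are filtered out.
    terms += [
        f"-hastemplatecollection:{name}" for name in excluded_template_collections
    ]
    return " ".join(terms)


# "infobox" is a hypothetical collection name used only for this example.
print(build_image_suggestion_query(["infobox"]))
# hasrecommendation:image -hastemplatecollection:infobox
```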

Users can also adjust their filters (which are likewise translated to ElasticSearch queries) so that they can find image suggestions for particular topics, like “Music” or “Africa”.

The search results are then transformed into Task objects that are placed into a TaskSet object that is cached for each user, for performance.
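The shape of that transformation can be sketched as follows. This is an illustrative Python model, not the extension's actual classes: the field names, the `image-recommendation` task type string, and the `TaskSetCache` helper are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Task:
    page_id: int
    title: str
    task_type: str = "image-recommendation"  # hypothetical identifier


@dataclass
class TaskSet:
    tasks: List[Task] = field(default_factory=list)


class TaskSetCache:
    """Per-user cache: the search only runs when no task set is stored."""

    def __init__(self, search: Callable[[str], List[dict]]):
        self._search = search
        self._by_user: Dict[int, TaskSet] = {}

    def get(self, user_id: int, query: str) -> TaskSet:
        if user_id not in self._by_user:
            hits = self._search(query)
            self._by_user[user_id] = TaskSet(
                [Task(page_id=hit["page_id"], title=hit["title"]) for hit in hits]
            )
        return self._by_user[user_id]

    def invalidate(self, user_id: int) -> None:
        # Called when the user changes filters, so the next get() searches again.
        self._by_user.pop(user_id, None)
```

Keying the cache by user keeps repeat visits to the homepage cheap, at the cost of having to invalidate the entry whenever the user's filters change.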

Loading the image suggestion data

As noted above, the search index just tells us which articles have image suggestions, but it doesn’t contain the metadata itself. For that, we need to access the Cassandra dataset.

The Platform Engineering Team built a lightweight HTTP service as a frontend to the Cassandra dataset (code). That service is deployed via Wikimedia’s Kubernetes self-service deployment.[3]

In production, our code can do requests like curl -H 'Accept: application/json' 'http://localhost:6030/public/image_suggestions/suggestions/<wiki id>/<page id>' to get image suggestion data for a specific article on a wiki:

    {
      "rows": [
        {
          "wiki": "cswiki",
          "page_id": 311675,
          "id": "644c90bc-ba40-11ec-ba4c-f0d4e2e69820",
          "image": "Anestis_Delias_rebetiko_musician_about_1933.jpg",
          "confidence": 80,
          "found_on": null,
          "kind": [
            "istype-commons-category"
          ],
          "origin_wiki": "commonswiki",
          "page_rev": 20265888
        },
        ...
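As a rough sketch of how a client might consume this response, the Python below builds the request URL from the pattern shown above and picks one row from the `rows` array. Choosing the row with the highest `confidence` is an assumption for illustration; the selection rule our code actually uses is not described here. The second row in the sample is invented so there is something to choose between.

```python
import json
from typing import Optional
from urllib.parse import quote

BASE_URL = "http://localhost:6030/public/image_suggestions/suggestions"


def suggestion_url(wiki_id: str, page_id: int) -> str:
    """Build the per-article URL: <base>/<wiki id>/<page id>."""
    return f"{BASE_URL}/{quote(wiki_id, safe='')}/{page_id}"


def best_suggestion(response_body: str) -> Optional[dict]:
    """Return the row with the highest confidence, or None if there are no rows.
    (Picking by confidence is an assumed rule, not the documented one.)"""
    rows = json.loads(response_body).get("rows", [])
    if not rows:
        return None
    return max(rows, key=lambda row: row["confidence"])


sample = json.dumps({
    "rows": [
        {"image": "Anestis_Delias_rebetiko_musician_about_1933.jpg", "confidence": 80},
        {"image": "Hypothetical_second_candidate.jpg", "confidence": 40},  # invented row
    ]
})
print(suggestion_url("cswiki", 311675))
print(best_suggestion(sample)["image"])
```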

The API and access to Cassandra are pretty fast, but for improved reliability and performance for our users, we end up caching this data. The workflow is:

- User triggers an event to update their cached task set (changing task / topic filters on Special:Homepage).

- Listener code runs in a deferred update and fetches image suggestion data for each image suggestion task in the task set.

- The image suggestion data is placed in a cache.
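The three steps above can be sketched in a few lines of Python. The `DeferredUpdates` queue here is a stand-in that only mimics the idea of running work after the main request has been handled; it is not MediaWiki's API, and the function and parameter names are assumptions for the example.

```python
from collections import deque
from typing import Callable, Deque, Dict, Iterable


class DeferredUpdates:
    """Stand-in queue of callables to run after the main request work is done."""

    def __init__(self) -> None:
        self._queue: Deque[Callable[[], None]] = deque()

    def add(self, update: Callable[[], None]) -> None:
        self._queue.append(update)

    def run_all(self) -> None:
        while self._queue:
            self._queue.popleft()()


def on_task_set_updated(
    page_ids: Iterable[int],
    fetch: Callable[[int], dict],
    cache: Dict[int, dict],
    deferred: DeferredUpdates,
) -> None:
    """Listener: schedule a cache warm-up instead of fetching inline."""
    ids = list(page_ids)

    def warm_cache() -> None:
        for page_id in ids:
            if page_id not in cache:  # skip suggestions we already hold
                cache[page_id] = fetch(page_id)

    deferred.add(warm_cache)
```

Nothing is fetched until `run_all()` is called, which is the point of deferring: the user's filter change returns quickly, and the slower suggestion lookups happen afterwards.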

Displaying the image suggestion to the user

After the user clicks or taps on the image suggestion card on /wiki/Special:Homepage, we take them to the article. On the server side, we fetch the image suggestion metadata from the cache.

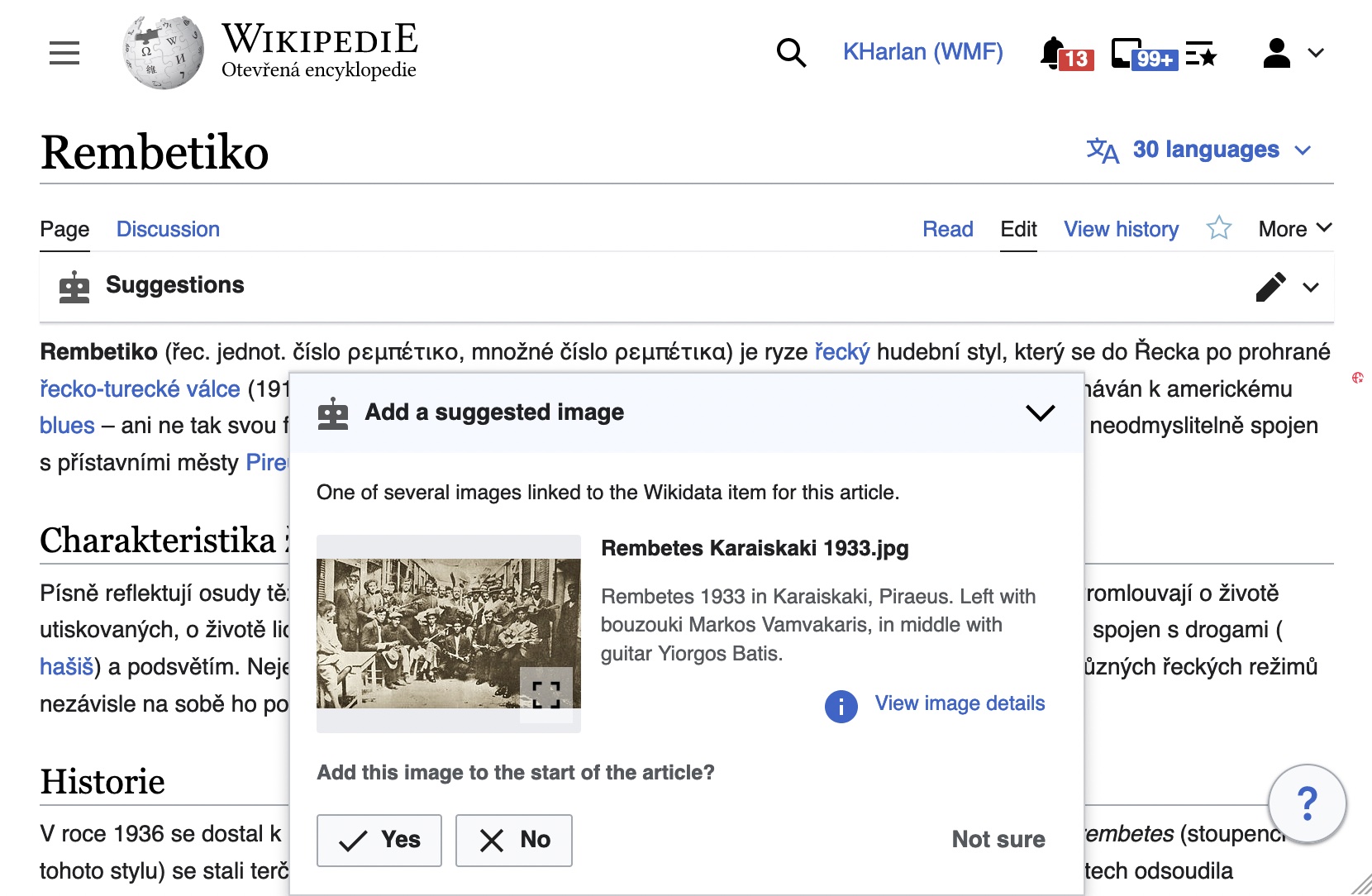

Then we load the AddImage plugin for VisualEditor:

Our plugin disables most of VisualEditor’s features to make the workflow as simple as possible for new users. (We do provide an escape hatch if the user decides they’d rather edit the “normal” way.)

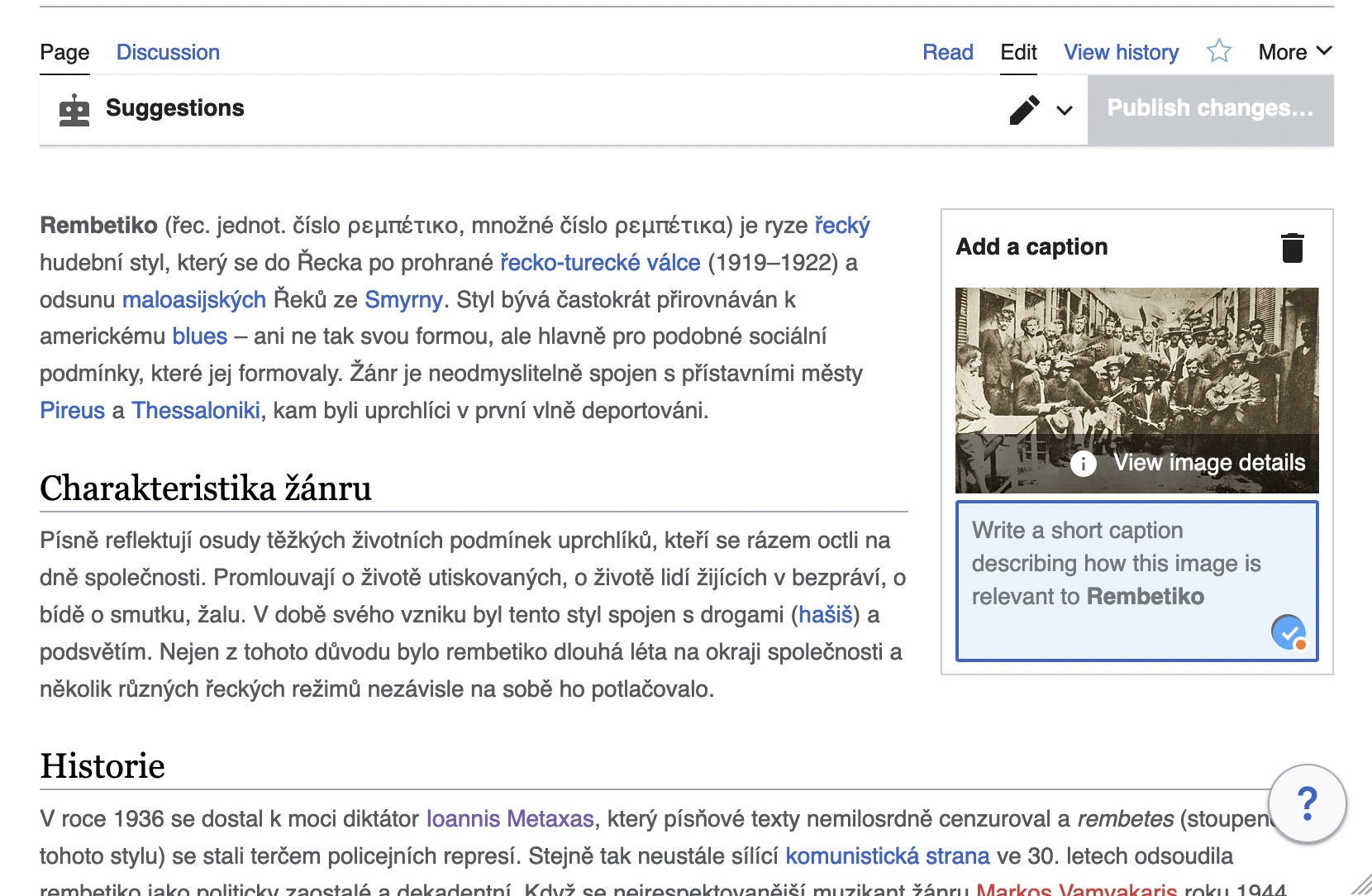

The user can view image details to help them decide if it’s a good fit for the article. If they press “Yes”, we prompt them to write a caption (and again the user has access to image details to facilitate writing a good caption):

If the user rejects the image, we gather some information as to why it was a poor fit, and we can use that data to help us tune the data pipeline.

Either way, the last step in the process is to emit an event to the EventGate service, so that the data pipeline scripts know whether a given suggestion should be ignored in future runs. Our code also takes care to invalidate the relevant entries in the search index as needed.

Moderation

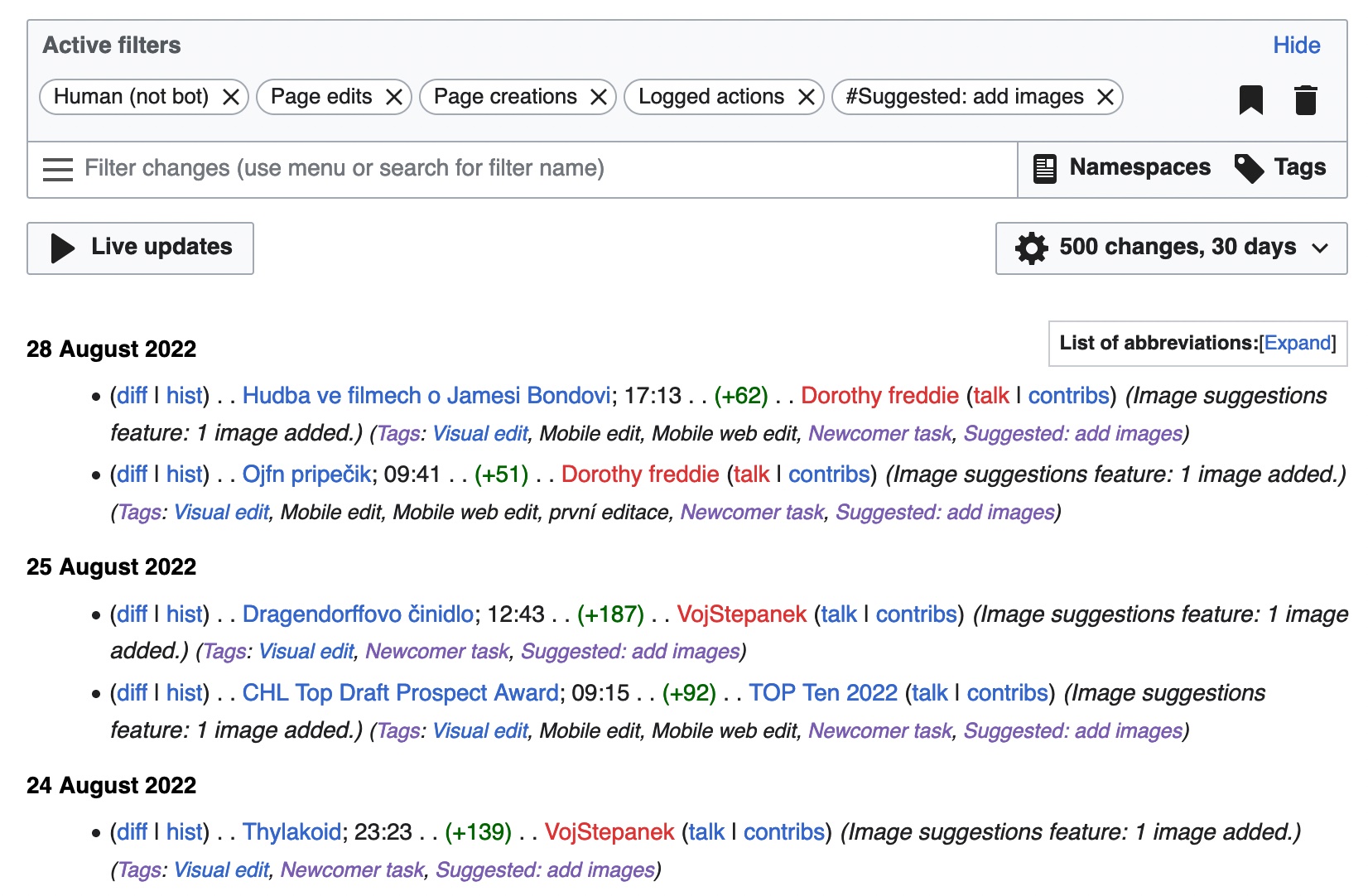

After the user saves an edit, we tag the edit with the “Suggested: add images” tag, which allows experienced editors and mentors to use the /wiki/Special:RecentChanges interface to keep an eye on the edit quality:

That’s it!

Now you know how the image suggestion feature works! This was a large project involving contributions across many teams (Android, Design, GLAM, Growth, Platform, Research, Search, SRE, Structured Data). The project is still relatively new, and we are planning to iterate on the infrastructure and user-facing features.

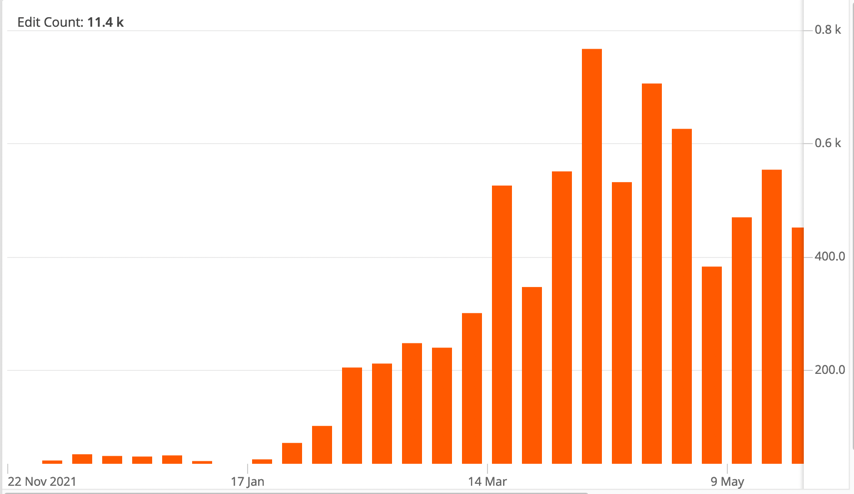

So far we’ve seen ~11,000 edits using the feature, and we expect to see this number increase as we improve the feature and roll it out to more wikis:

If this type of work is interesting to you, my team is hiring!

[1] For this and subsequent explanations, the canonical documentation is on Wikitech.

[2] See [EPIC] Image Suggestions Notifications for More Experienced Contributors for the tasks involved in this work.

[3] There’s no publicly available service yet (phab:T306349). Due to the lack of public access, we had to do some workarounds with SSH tunnels to develop more productively from our local workstations.